AUSTIN, Texas—A team of engineers and psychologists at The University of Texas at Austin has received $1.2 million from the National Science Foundation to develop a visual search system capable of finding objects in cluttered environments.

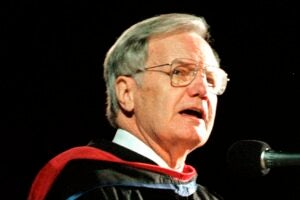

Dr. Alan C. Bovik, an electrical engineering professor, and the project’s other principal investigators, Drs. Larry Cormack, Bill Geisler and Eyal Seidemann, all psychology professors at The University of Texas at Austin, will study how humans search for objects in order to understand the process used by the human eye. The group will then create mathematical algorithms for software and companion hardware capable of searching visually as humans do.

“The most obvious example would be a robot with eyes,” Bovik says. “You’d tell it to look for your shoes, and it would find them and bring them to you. The concept is science fiction right now, but could be science fact farther in the future.”

One part of their research will develop a revolutionary camera gaze control device called Remote High-Speed Active Visual Environment, or RHAVEN. Attached to a camera, the device would “look around” as a human does. The human eye has lower resolution in its peripheral vision, which reduces the amount of information the brain must process. This arrangement, called a foveated visual system, is Mother Nature’s method of allowing the eye to use information from its periphery to decide where to look next.
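The idea of foveation can be illustrated with a minimal Python sketch. It is not the RHAVEN design, which the announcement does not describe; the function name `foveate`, the parameters `fovea_radius` and `max_half_width`, and the linear fall-off rule are all assumptions made for illustration. Pixels near the gaze point keep full resolution, while pixels farther away are replaced by the average of a progressively larger neighborhood.

```python
import math


def foveate(image, gaze_row, gaze_col, fovea_radius=2.0, max_half_width=4):
    """Return a copy of `image` whose detail falls off with distance from gaze.

    `image` is a list of rows of grayscale values. The fall-off rule below is
    an illustrative assumption, not a model of the real eye or of RHAVEN.
    `fovea_radius` must be greater than zero.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Eccentricity: distance of this pixel from the point of gaze.
            ecc = math.hypot(r - gaze_row, c - gaze_col)
            # Half-width of the averaging window: 0 inside the fovea,
            # growing by one per `fovea_radius` of distance, up to a cap.
            half = min(max_half_width, int(ecc // fovea_radius))
            rows = range(max(0, r - half), min(height, r + half + 1))
            cols = range(max(0, c - half), min(width, c + half + 1))
            total = sum(image[rr][cc] for rr in rows for cc in cols)
            out[r][c] = total / (len(rows) * len(cols))
    return out


# A 16x16 checkerboard: fine detail everywhere before foveation.
checkerboard = [[(r + c) % 2 for c in range(16)] for r in range(16)]
foveated = foveate(checkerboard, gaze_row=8, gaze_col=8)
```

In the result, the checkerboard pattern survives around the gaze point but blurs toward a uniform gray at the edges, so the periphery carries less information while still indicating roughly what is there.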

“The development of artificial systems that actively gather, interpret and act upon visual information like humans would be perhaps the single largest leap imaginable in information technology,” Bovik says.

The researchers want to statistically quantify where humans look when searching, to create a search system that’s “bottom-up.” Engineers use “top-down” systems, which are not yet very successful: such a system sees a face, for example, and tries to model it mathematically. A bottom-up system looks for the simplest things that attract the eye’s attention, such as a bright light or movement. Bovik’s group asks research subjects to wear an “eye tracker,” which measures where the participants look.

“If we know what they’re looking at and where they’re looking,” Bovik says, “we can measure the local image statistics at the point of gaze and make quantifiable statements about the kind of structure drawing the eye. No one else is doing this kind of research, and no one ever has before.”
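As a rough illustration of measuring local image statistics at the point of gaze, the following Python sketch computes two simple quantities in a small patch around a fixation. The announcement does not say which statistics the group measures; mean luminance and RMS contrast, along with the name `patch_statistics` and the parameter `half_width`, are assumptions chosen for the example.

```python
import math


def patch_statistics(image, gaze_row, gaze_col, half_width=2):
    """Summarize the image patch centered on a fixation point.

    `image` is a list of rows of grayscale values. The two statistics
    returned are illustrative choices, not the study's actual measures.
    """
    height, width = len(image), len(image[0])
    # Clip the patch to the image borders.
    rows = range(max(0, gaze_row - half_width), min(height, gaze_row + half_width + 1))
    cols = range(max(0, gaze_col - half_width), min(width, gaze_col + half_width + 1))
    values = [image[r][c] for r in rows for c in cols]
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # RMS contrast: standard deviation of luminance relative to its mean.
    rms_contrast = math.sqrt(variance) / mean if mean > 0 else 0.0
    return {"mean_luminance": mean, "rms_contrast": rms_contrast}


# Hypothetical 10x10 image: dark left half, bright right half.
edge_image = [[0.2 if c < 5 else 0.8 for c in range(10)] for r in range(10)]
on_edge = patch_statistics(edge_image, gaze_row=5, gaze_col=5)
on_flat = patch_statistics(edge_image, gaze_row=5, gaze_col=1)
```

Here a fixation on the boundary yields a higher contrast value than a fixation on the flat region. Comparing such values across many recorded fixations is one way a statement about "the kind of structure drawing the eye" could be made quantitative.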

Bovik sees the results of his research as having useful applications in medical diagnostics as well. For example, he says, most doctors miss about 10 percent of the breast tumors that appear on a mammogram. However, a few physicians consistently identify the most elusive tumors. By documenting the methods used by these elite few, he hopes to create a machine to serve as a “physician’s assistant” that first scans mammograms and then shows the result to the doctor as an additional cue.

“It’s just another lifesaving possibility stemming from this technology,” says Bovik.

Bovik is the Cullen Trust for Higher Education Endowed Professor.

For more information contact: Becky Rische, College of Engineering, 512-471-7272.