What factors drive climate change? How did galaxies form after the Big Bang? Have we reached a point in medicine where doctors can tailor treatments to the individual patient and illness? What will we discover in the vast streams of data flowing through telescopes, sensors and large particle colliders?

The answers to these “grand challenge” questions are vital to our health, our quality of life and security, and our understanding of the universe. With persistent advances in computing technologies and expertise, researchers are beginning to get to the heart of these problems.

And now they have an unprecedented tool to extend knowledge and compute answers to these critical questions.

“Ranger” Principal Investigator Jay Boisseau and Co-Principal Investigators Karl Schulz, Tommy Minyard and Omar Ghattas (not pictured) brought the 504-teraflop supercomputer to The University of Texas at Austin, where it will help the nation’s scientists address some of the world’s most challenging problems.

“Ranger,” the most powerful computing system in the world for open scientific research, entered full production on Feb. 4 at the Texas Advanced Computing Center (TACC), a leading supercomputing center at The University of Texas at Austin. Ranger’s deployment marks the beginning of the Petascale Era in high-performance computing – where systems will approach a thousand trillion floating point operations a second – and heralds a new age for computational science and engineering research.

“Ranger is so much more powerful than anything that’s come before it for open science research,” Jay Boisseau, director of TACC, said. “It will be the first time researchers in many disciplines will be able to conduct simulations they have been planning in some cases for many years.”

To Boisseau, high-end computing centers are as fundamental to major research universities in the 21st century as libraries were in the 19th and 20th centuries.

“They provide resources that attract researchers and allow them to do the most interesting research on challenging, often groundbreaking, problems, and that’s what they’re really in it for,” Boisseau said.

With the ability to model complex systems on a scale never available before, scientists and engineers across all fields are expecting significant breakthroughs that expand the research universe and solve “grand challenge” questions affecting society.

Optimized for Science

In the public imagination, supercomputers – known today as high performance computing (HPC) systems – bring to mind grainy photos of room-sized mainframes, blinking lights and spinning tapes. And on the surface, Ranger fits this image. Rows of new racks, housing 62,976 microprocessor cores, fill a 6,000-square-foot machine room in TACC’s new building at the J.J. Pickle Research Campus. The roar of the system suggests the massive power available to work on the most intractable science and engineering questions of our time. But Ranger only superficially resembles the supercomputers of earlier eras.


With a peak performance of 504 teraflops, Ranger is 50,000 times more powerful than today’s PCs, and five times more capable than any open-science computer available to the national science community.
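As a rough check on these ratios, the short Python sketch below works backward from the 504-teraflop peak. It assumes both comparisons refer to peak floating-point rate, which the article does not state, and the variable names are illustrative only.

```python
# Back-of-envelope check of the ratios quoted above.
# Assumption: both comparisons are about peak floating-point rate.
PEAK_TERAFLOPS = 504        # Ranger's stated peak
PC_RATIO = 50_000           # "50,000 times more powerful than today's PCs"
OPEN_SCIENCE_RATIO = 5      # "five times more capable" than prior systems

# 1 teraflop = 1,000 gigaflops
implied_pc_gigaflops = PEAK_TERAFLOPS * 1_000 / PC_RATIO
implied_prior_teraflops = PEAK_TERAFLOPS / OPEN_SCIENCE_RATIO

print(f"Implied PC peak: about {implied_pc_gigaflops:.1f} gigaflops")
print(f"Implied prior open-science peak: about {implied_prior_teraflops:.0f} teraflops")
```

Under that assumption, the comparison implies a desktop PC of roughly 10 gigaflops and a previous open-science system of roughly 100 teraflops.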

Funded through the National Science Foundation (NSF) “Path to Petascale” program, Ranger is a collaboration among TACC, The University of Texas at Austin’s Institute for Computational Engineering and Sciences (ICES), Sun Microsystems, Advanced Micro Devices, Arizona State University and Cornell University. The system will enable the leading researchers in the country to advance and accelerate computational research in all scientific disciplines. The $59 million award, which covers the $30 million system and four years of operating costs, was announced in fall 2006. The investment marks NSF’s renewed commitment to leadership-class high-performance computing, ensuring the U.S. remains a leader in petascale science.

Ranger greatly enhances the computing capacity of the NSF TeraGrid, a nationwide network of academic HPC centers that provides scientists and researchers access to large-scale computing power and resources. This collaborative network integrates a distributed set of high-capability computational, data-management and visualization resources to make research more productive. With science Web portals and education programs, the TeraGrid also connects and broadens scientific communities. Ranger serves as the largest HPC resource on the TeraGrid, and offers more computer cycles for calculations than all other TeraGrid HPC systems combined.

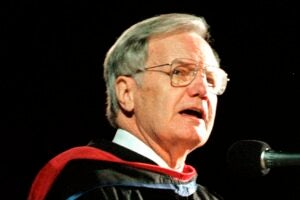

“Ranger is an incredible asset for The University of Texas at Austin, for the national scientific community and for society as a whole,” William Powers Jr., president of The University of Texas at Austin, said. “That the National Science Foundation has entrusted us with this vital scientific instrument for research is a testament to the expertise of the staff at TACC, who are among the most innovative leaders in high performance computing.”

The end of the cosmic Dark Ages. This supercomputer simulation shows how gravity assembles one hundred million solar masses of dark matter (top row) and gas (middle row) into the first galaxy. Each column (from left to right) CREDIT: T. H. Greif, J. L. Johnson, V. Bromm (UT Austin, Astronomy Department); Ralf S. Klessen (Univ. of Heidelberg); TACC

The award for Ranger represents the largest NSF grant ever given to The University of Texas at Austin.

Each year, Ranger will provide more than 500 million hours of computing time to the science community, performing well over 200,000 years of computational work over its lifetime.
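These figures can be sanity-checked from numbers given elsewhere in this article: 62,976 processor cores and a four-year life cycle. The sketch below assumes that “hours of computing time” means core-hours, that every core is available around the clock, and that a year has 365 days. None of these assumptions is stated by TACC, so treat the result as an upper bound.

```python
# Rough upper bound on Ranger's yearly and lifetime computing time.
# Assumptions (not from TACC): "hours" are core-hours, all cores run
# 24 hours a day with no downtime, and a year has 365 days.
CORES = 62_976            # core count quoted in this article
LIFETIME_YEARS = 4        # life cycle quoted in this article
HOURS_PER_YEAR = 24 * 365

core_hours_per_year = CORES * HOURS_PER_YEAR
lifetime_core_hours = core_hours_per_year * LIFETIME_YEARS
lifetime_core_years = lifetime_core_hours // HOURS_PER_YEAR

print(f"{core_hours_per_year:,} core-hours per year")      # 551,669,760
print(f"{lifetime_core_hours:,} core-hours over lifetime")  # 2,206,679,040
print(f"{lifetime_core_years:,} core-years over lifetime")  # 251,904
```

About 552 million core-hours a year and about 252,000 core-years over four years are consistent with “more than 500 million hours” and “well over 200,000 years.”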

Attaining the Unattainable

Every day, we reap the benefits of high-performance computing as it lays the foundation for a technological world. Weather predictions, vehicle crash tests, subsurface oil exploration, airplane design and even dancing animated rats are simulated on massively parallel HPC clusters. Look around and you’ll see products of high-end computational design everywhere.

But how do HPC systems help us explore the Big Bang? Understand the dynamics within the neutron of an atom? Or predict tomorrow’s weather? Although these phenomena occur in the natural world, they are most effectively explored through computational science. This interdisciplinary field uses mathematical models, numerical analysis and visualization to study problems too small, large, complex, dangerous, expensive or distant to explore experimentally.

Computational science has grown into the third pillar of scientific study, complementing theory and experimentation, and proving to be vital in the search for practical and fundamental knowledge. By translating the laws that govern the natural world into mathematical models and computer codes, and subdividing those problems into smaller modules that can be solved simultaneously, HPC systems resolve problems in weeks or months that a researcher working on a desktop or a small university cluster would need decades to solve.
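The idea of subdividing a problem into pieces that are solved simultaneously can be illustrated with a toy example. The Python sketch below splits a numerical integral into independent chunks, hands each chunk to a separate worker and sums the partial results. It is a minimal illustration of the decomposition pattern only: the function names are hypothetical, production HPC codes typically use message-passing libraries across many nodes, and Python threads do not give a real speedup for this kind of arithmetic.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def integrate_chunk(f, a, b, steps):
    """Midpoint-rule estimate of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))


def integrate_parallel(f, a, b, chunks=8, steps_per_chunk=10_000):
    """Split [a, b] into equal chunks, integrate each one independently,
    then combine the partial sums."""
    width = (b - a) / chunks
    bounds = [(a + k * width, a + (k + 1) * width) for k in range(chunks)]
    with ThreadPoolExecutor(max_workers=chunks) as pool:
        partials = pool.map(
            lambda ab: integrate_chunk(f, ab[0], ab[1], steps_per_chunk),
            bounds,
        )
        return sum(partials)


# The integral of sin(x) from 0 to pi is exactly 2.
estimate = integrate_parallel(math.sin, 0.0, math.pi)
print(f"estimate = {estimate:.6f}")
```

Each chunk depends only on its own bounds, so the pieces can run in any order or at the same time. That independence is what lets a large system spread one problem across thousands of processors.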

“The conduct of research depends on intellectual expertise, but it also depends on the instrument needed to apply that expertise,” Boisseau said. “Increasingly, high-end computing is an essential instrument.”

Over time, researchers from fields as diverse as psychology, biochemistry, quantum physics, cosmology and geology have recognized the unique usefulness of computational methods, and have applied them to a wide range of research questions.

“Astronomy wouldn’t exist without computation,” asserted Dr. Volker Bromm, assistant professor of astronomy at The University of Texas at Austin. Bromm relies on HPC systems to peer deeper into the past than is possible with even the most powerful telescopes, and thereby piece together a history of the universe.

“The advance of supercomputers means you can ask more ambitious questions and put more physics into your models,” he said. “It’s all driven by supercomputers.”

The original “Lonestar” was a Cray T3E with a peak of 0.05 teraflops. In 2003, TACC installed “Lonestar II,” a Dell Linux cluster that, at six teraflops, was one of the fastest academic supercomputers at the time. In 2006, TACC upgraded to Lonestar III with a peak of 62 teraflops, which again made it the most powerful academic supercomputer in the world. Ranger is TACC’s third deployment of an HPC system that is a “step-function” increase over existing systems.

Only the scale of the systems and the amount of access available limit the far-reaching discoveries enabled by computational science. However, academics in the U.S. have been starved of HPC cycles, Karl Schulz, TACC assistant director and chief scientist on Ranger, said.

“We have very good computational scientists,” he said, “but they were competing for a limited number of cycles. Now, you have this huge step-function with Ranger, which provides more capacity than all of the other systems in TeraGrid combined.”

“The computational science community has been advocating for petascale machines for a decade,” said Omar Ghattas, professor of geological sciences and mechanical engineering and director of the Center for Computational Geosciences at ICES. “We’ve been making the case for the scientific breakthroughs that will be engendered by the petaflop computing age. It’s taken a long time, but NSF has now responded. With the petascale acquisitions program, NSF is investing in high-end supercomputers in a big way.”

Ghattas, a co-principal investigator of the Ranger system, leads an early user team that will produce the highest resolution models of convection in the Earth’s mantle to date, enabling a better understanding of the evolution of tectonic deformation. Their work is emblematic of how larger HPC systems allow for more accurate simulations, finer-grained models, shorter time to results, better statistical analysis, higher-resolution visualization – in other words, bigger, better science.

“The huge numbers of processors that are available on Ranger make it an incredible tool,” said Peter Coveney, a renowned professor of physics at University College London, who will use Ranger to model mutations in HIV proteins. “If you have the problems, or you can think of ways of using that scale of machine – and we certainly can – then you can have a field day.”

A History of Success

Few would have guessed 10 years ago that TACC would be chosen to design, build and maintain the world’s most powerful computer system for open science research. But Ranger reflects the long-term vision of Boisseau and a string of successful deployments stretching back to 2002 that established TACC as the innovating underdog of the HPC community.

“When I got here in June 2001, we weren’t well known nationally, or even on our own campus,” Boisseau recalled. “But we developed a vision for leadership in supercomputing, everyone bought into a common goal and strategy, and we’ve accomplished what seemed to be impossible: from non-competitive to ‘national champions’ in six years.”

Each year from 2002 to 2006, TACC won grants for new or upgraded systems, steadily building its resources, services, experience and expertise.

Understanding HIV drug resistance. A snapshot of the HIV-1 protease (a key protein that is the target for the protease inhibitor drugs) from a computational simulation. Mutations from the “wildtype” can occur within the active site (G48V) and at remote locations along the protein chain (L90M). The “asp dyad” is at the centre of the active site, where polyprotein chains are snipped by the enzyme; this is the region that any drug must occupy and block. CREDIT: Peter Coveney, University College London

“Within a year and a half of arriving at TACC,” Boisseau said, “we were deploying a world-class cluster. It was six teraflops, but that was a world-class system at the time.

“We all wanted to be a part of changing the world through deploying the most powerful resources here at The University of Texas at Austin, and helping people around the state, nation and world to take advantage of them. Everybody did whatever it took to acquire the skill and expertise needed to be competitive in proposals to acquire the next system.”

Ranger’s introduction marks TACC’s steep climb through the ranks of the HPC world and the realization of Boisseau’s bold vision. The project brought together not only technology partners Sun and AMD, but also ICES, the Cornell University Center for Advanced Computing and the Arizona State University High Performance Computing Institute, which are providing innovative application development strategies, data management technologies and training opportunities.

“The Ranger system is the first of a new series of NSF ‘Track 2’ investments intended to provide a quantum leap in computational power necessary for transformative discovery and learning in the 21st century,” said Daniel E. Atkins, director of the Office of Cyberinfrastructure at the NSF. “I congratulate and thank TACC, Sun and AMD on their accomplishments to date and their commitment to providing excellent leading-edge cyberinfrastructure services for the nation’s research communities.”

Bigger, Better, Different Science

So what will Ranger be computing? With so much capacity, TACC staff anticipate that several studies considered groundbreaking a year ago will be conducted simultaneously on Ranger, speeding the pace of discovery and spreading the benefits throughout society.

A national committee of scientists spanning scientific research domains allocates 90 percent of the computing time on Ranger to the premier computational scientists in the country, based on which projects are most likely to use Ranger’s resources efficiently and derive the most significant results.

“From weather prediction and climate modeling, to protein folding and drug design, to new materials science, to cosmological calculations, to you name it,” Boisseau said.

Modeling Mantle Convection. A sequence of snapshots from a simulation of a model mantle convection problem. Images depict a rising temperature plume within the Earth’s mantle, indicating the dynamically-evolving mesh required CREDIT: Simulations by Rhea Group (Carsten Burstedde, Omar Ghattas, Georg Stadler, Tiankai Tu, Lucas Wilcox), in collaboration with George Biros (Penn), Michael Gurnis (Caltech), and Shijie Zhong (Colorado)

Ten percent of Ranger’s time is allocated by TACC, with five percent going to research projects from Texas higher education institutions, and five percent going to the Science and Technology Affiliates for Research (STAR) program, which helps industrial partners develop more advanced computational practices.

Early users tested Ranger with great success, performing landmark simulations in a fraction of the time previously needed. In the past, an allocation of one million computing hours was considered generous. Now, top projects will run up to 25 million computing hours in 2008, with more time possible in the future.

The leap in available computing time makes a significant difference in the quality and quantity of research that can be performed. It allows for more grid points in models of the solid Earth, more gas particles in visualizations of the early universe, higher resolution images of the HIV proteins interacting with drug molecules and more atoms in predictions of new materials with the potential to make the world a greener, safer, healthier place.

While Ranger undoubtedly provides a capacity boost to researchers, increasing the accuracy and scope of existing projects, it also is a stimulus to new questions, new approaches and a more probing analysis of the physical world.

“We and all the other groups that depend largely on supercomputer centers are going to see a big increase in the time available,” said Dr. Doug Toussaint, a professor of physics at The University of Arizona. “When this has time to sink in, we’ll all be doing more accurate work and perhaps approaching problems that we wouldn’t have thought of trying before.”

The order of magnitude increase in computing time also means simulations are no longer limited to a single, make-or-break run.

“Someone’s going to get to run a landmark case, and then they’re going to look at the output and see if they can do better,” Schulz said. “That’s going to lead to breakthrough science because it’s the first time that researchers will be able to run at such a large scale and be able to do it with frequency.”

With one of the lowest cost-per-flop ratios of any high-end system, Ranger is seen as a tremendous economic stimulator, propelling leading-edge science and technology forward and keeping the U.S. competitive. If petascale resources open up brand new avenues of research, as is believed, NSF’s funding will be repaid with breakthrough discoveries worth far more than its price tag.

Designed for the Future

Scientists say there is another important reason to build systems of this scale. Ranger provides a research and development platform for future technologies that will affect us all. Just as today’s laptops are more powerful than the fastest supercomputers of 20 years ago, so too will the computers of tomorrow be far more powerful than Ranger. As massively parallel computer architectures of the kind seen on HPC systems become commonplace in the coming years, the work of optimizing the interactions among tens of thousands of processors has great social and economic importance.

“It looks like a lot of what scientists are doing is esoteric and limited to a niche area,” Ghattas said. “But the reality is that developing new mathematical models, new computational algorithms, new software implementations that can best harness these thousands of processors – that technology trickles down.”

The trickle-down effect extends the benefits of Ranger from a small community of leading-edge scientists to the public, who will inherit the solutions developed on these high-end, specialized systems.

After lobbying for a system of this scale for more than a decade, researchers now have a tool to catalyze and accelerate discovery throughout science.

“This is going to pay tremendous dividends to the computational science community,” Ghattas said. “But now, the ball is in our court. We have to deliver.”

“We have an obligation to ensure that Ranger is a tremendously useful investment,” Boisseau said. “We have no doubt that over its four-year life cycle, a massive amount of research will be enabled by this system. It is our responsibility to make sure that the system is as effective as possible for groundbreaking research on a daily basis.”

Ranger will be officially dedicated in a ceremony on Feb. 22.

By Aaron Dubrow

Texas Advanced Computing Center

Photos: Christina Murrey