The Ranger supercomputer at the Texas Advanced Computing Center (TACC) today celebrates two years of enabling groundbreaking computational science as a leading system for researchers in Texas and across the nation via the National Science Foundation’s (NSF) TeraGrid initiative.

At 579.4 teraflops, Ranger was the first system in the NSF “Path to Petascale” program and remains among the top 10 systems on the supercomputing “TOP500” list two years later because of its extraordinary scale. Ranger is also one of the top systems in the world in terms of total memory at 123 terabytes.

“We’re proud of the fact that Ranger has been so widely requested and used for diverse science projects,” said Jay Boisseau, principal investigator of the Ranger project and director of TACC. “It supports hundreds of projects and more than a thousand users, and you don’t attract that many projects and researchers unless you’re running a great, high-impact system. Ranger is in constant demand, often far in excess of what we can provide.”

Ranger is a Sun Constellation cluster composed of 15,744 quad-core AMD Opteron™ processors and runs the Linux operating system. “Ranger has changed people’s perceptions of Linux clusters as supercomputers,” said Tommy Minyard, a co-principal investigator on the project and director of Advanced Computing Systems at TACC. “They are now widely accepted as a large-scale computing technology.”

Since Feb. 4, 2008, Ranger has enabled 2,863 users across 981 unique research projects to run a total of 1,089,075 jobs, consuming 754,873,713.8 hours of processing time. Despite its vast scale and complexity, Ranger has an uptime of 97 percent.

According to Jose Muñoz, deputy director in the Office of Cyberinfrastructure at the NSF, the integration of Ranger into the NSF TeraGrid significantly changed the high-performance computing landscape, nationally and globally.

“Ranger enabled the open science and engineering communities to address challenging problems in areas such as astrophysics, climate and weather, and earth mantle convection at unprecedented scales,” Muñoz said. “Ranger truly was a vanguard in NSF’s ‘Path to Petascale’ program and is a testament to what can be done when ‘thinking out of the box.’”

When Ranger arrived two years ago, it provided nearly an order-of-magnitude leap in performance over the most powerful NSF supercomputer, according to Omar Ghattas, a co-principal investigator on the project, director of the Center for Computational Geosciences at the Institute for Computational and Engineering Sciences, and professor of Geological Sciences and Mechanical Engineering at The University of Texas at Austin.

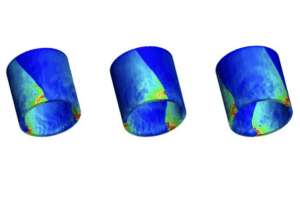

“Ranger has been instrumental for our NSF PetaApps mantle convection modeling project, a collaboration between UT Austin and Caltech,” Ghattas said. “We used Ranger to carry out the first global mantle convection simulations that resolve kilometer scale dynamics at plate boundaries. These simulations have helped to improve our understanding of detailed geodynamic phenomena and have yielded excellent fits with tectonic plate motion data.”

The lessons learned deploying Ranger have made a major impact on widely used open source software technologies such as the InfiniBand™ software stack and subnet manager, Lustre, Open MPI, MVAPICH, and Sun Grid Engine.

“These software packages have been significantly improved because of the Ranger project, and are now downloaded by people building clusters in other places. Thus, the Ranger project has had a huge impact on other clusters and the science done on those clusters around the world,” Boisseau said.

Ranger also has increased functionality through the integration of Spur, a high-end remote visualization system tied directly into Ranger’s InfiniBand™ network and file system. Researchers can perform high-end computing and scientific visualization at a massive scale on Ranger using Spur, without having to move their data to another system.

“The system matured as the users matured,” said Karl Schulz, a co-principal investigator on the Ranger project and director of the Application Collaboration group at TACC. “Now, researchers routinely run on 16,000 cores, and we have several research groups running on more than 40,000 cores and up to 60,000 cores.”

“With 123 terabytes of total memory, problems that ran on Ranger when it first entered full production could not be run anywhere else. In the scientific discovery process, having a substantial amount of total memory can be compared to getting access to a telescope that can see farther and has higher resolution,” said Schulz. “You change what you think you’re interested in.”

Ghattas said, “Ranger’s enormous speed and memory drove computational scientists to rethink their underlying models, data structures and algorithms so that their codes could, for the first time, capitalize on the tens of thousands of processor cores provided by a new generation of systems such as Ranger. In this sense, Ranger has served as the gateway to the petascale era for the computational science community.”

Two years into its four-year life cycle, Ranger is in the best part of its life, Boisseau said. “It’s no longer a question as to whether Linux clusters can scale to petaflops. Ranger is a robust and powerful science instrument. We designed it with large memory as well as large compute capability so it would be a general purpose system, and it now enables scientific research across fields and domains.”

Boisseau said future plans include improving the performance of the archival storage system, exploring more data-intensive computing projects, and adding more fast storage in the disk system.

“We’ve done a lot of good work over the previous two years and have constantly increased the focus of the science getting done as we resolved the technology improvements. Ranger will be a key and integral part of science and discovery well into the future.”

Ranger is a collaboration among TACC, The University of Texas at Austin’s Institute for Computational and Engineering Sciences (ICES), Sun Microsystems, Advanced Micro Devices, Arizona State University and Cornell University. The $59 million award from the National Science Foundation covers the system and four years of operating costs.

The TeraGrid, sponsored by the National Science Foundation Office of Cyberinfrastructure, is a partnership of people, resources and services that enables discovery in U.S. science and engineering. Through coordinated policy, grid software, and high-performance network connections, the TeraGrid integrates a distributed set of high-capability computational, data-management and visualization resources to make research more productive. With Science Gateway collaborations and education programs, the TeraGrid also connects and broadens scientific communities.