Longhorn, the world’s largest hardware-accelerated interactive visualization cluster, is located at the Texas Advanced Computing Center (TACC). It is providing researchers with unprecedented visualization capabilities and enabling them to analyze ever-growing volumes of data effectively.

Longhorn was awarded as part of the National Science Foundation’s (NSF) eXtreme Digital (XD) program, the next phase in the NSF’s ongoing effort to build a cyberinfrastructure that delivers high-end digital services to researchers and educators.

Since Longhorn debuted in January 2010, users have been ramping up their projects on the system. Currently, 470 projects are active on Longhorn, and more than 38,000 jobs have been completed.

The system’s total peak performance is 20.7 teraflops from its CPUs (central processing units) and 500 teraflops from its GPUs (graphics processing units). Its total peak rendering performance is 154 billion triangles per second, its total memory is 13.5 terabytes, and its disk storage is provided by a 210-terabyte global file system.

Although Longhorn’s GPU peak performance approaches Ranger’s 579.4 teraflops, Longhorn differs in two ways. First, each Longhorn node contains two GPUs. Second, Longhorn’s memory per core is four times greater than that of typical high-performance computing clusters. The ample shared memory on each node makes it practical to process large amounts of data concurrently.

“We like to push the envelope at TACC; this system is the first of its kind,” said Kelly Gaither, principal investigator and director of Data and Information Analysis. “We’re committed to providing excellent user support and effective training to enable transformative science on this resource.”

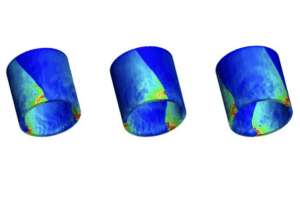

Thomas Fogal, a software engineer at the University of Utah’s Scientific Computing and Imaging Institute, is using Longhorn to study volume rendering, a technique that helps researchers understand three-dimensional structures through two-dimensional images. According to Fogal, a doctor might use volume rendering to see where a tumor sits in relation to the surrounding vascular structure, and a chemical engineer might use it to evaluate simulation results and identify the chemical mixture that yields the best fuel economy.

“Longhorn is an impressive machine,” Fogal said. “Using 256 GPUs, we volume rendered data larger than two terabytes, which is among the largest-ever published volume renderings. Such renderings would otherwise require hundreds of thousands of CPU cores. Our success was in major part due to the large number of GPUs available, in addition to TACC’s helpful staff that aided us in accessing them for our visualization work.”

“It is my hope that our results will demonstrate the capability of such a system, and encourage systems with similar architectural characteristics to become prevalent in the future,” Fogal said.

For very large data sets, interactive visualization is an effective way to convey information and insights about the data to scientists across many domains.

“Dealing with increasingly large data sets is a common challenge in every scientific field,” said Joydeep Ghosh, Schlumberger Centennial Chaired Professor in the Department of Electrical and Computer Engineering at The University of Texas at Austin. “We’re investigating data mining as a way to better facilitate the analysis and visualization of large scale scientific data.”

David LeBard, a postdoctoral research fellow at the Institute for Computational Molecular Science at Temple University, is using Longhorn to research electron transfer in proteins to derive a better understanding of the physical properties driving photosynthesis and metabolism.

“Longhorn is a useful tool for large-scale molecular simulation and analysis,” LeBard said. “The low-frequency motions we’re interested in are more easily accessible, and the analysis required to understand the physics is now tractable with a GPU cluster like Longhorn.”

To simplify job submission, job management and connection procedures, the TACC team developed the Longhorn Visualization Portal. Through the portal, users can visualize their data and view their allocations. The portal also provides access to the EnVision visualization system, which offers a full set of visualization methods through a simple wizard-based interface.

“The portal is designed to lower barriers to entry and serves as a means for outreach to those communities that do not have significant experience using visualization tools,” Gaither said. “We’re continually updating the portal to improve its capabilities and usability.”

Longhorn is available via the National Science Foundation TeraGrid (www.teragrid.org) along with TACC’s other compute and visualization resources: Ranger, Lonestar and Spur.

Longhorn operations are supported by TACC at The University of Texas at Austin. TACC is also partnering with the Scientific Computing and Imaging Institute at the University of Utah, the Purdue Regional Visual Analytics Center, the Data Analysis Services Group at the National Center for Atmospheric Research, the University of California, Davis and the Southeastern Universities Research Association.

About TeraGrid

TeraGrid, sponsored by the National Science Foundation Office of Cyberinfrastructure, is a partnership of people, resources and services that enables discovery in U.S. science and engineering. Through coordinated policy, grid software, and high-performance network connections, TeraGrid integrates a distributed set of high-capability computational, data-management, and visualization resources to make research more productive. With Science Gateway collaborations and education programs, TeraGrid also connects and broadens scientific communities. TeraGrid resources include more than two petaflops of combined computing capability and more than 50 petabytes of online and archival data storage from 11 resource provider sites across the nation.