Artificial intelligence has inspired countless novels and movies portraying its possible effects on society. Most portrayals have been extreme: either overly optimistic like “The Jetsons” or terrifyingly negative like “The Terminator.” For most of us, new AI technologies have the potential to improve lives in many ways, but they also bring real risks if not deployed with care. A mechanism to ensure that the benefits outweigh the risks is urgently needed.

The White House recently released guiding principles for regulating artificial intelligence. They encourage private-sector innovation while cautioning against a head-in-the-sand approach to technological development that could make dystopian fiction come to life. This is a great start. However, the principles largely overlook two critical issues: the risk that AI technologies could dramatically increase economic inequality, and the need for explicit international regulatory coordination.

AI policy in the U.S. has lagged far behind that of other countries, but during the past several months, things have improved markedly. In particular, the White House memorandum hits (almost) all the right points. Its 10 “Principles for the Stewardship of AI Applications” advocate a “light-touch regulatory approach,” calling for regulation only when existing statutes are insufficient for a specifically identified purpose. The memo also clearly (and appropriately) articulates that the risks of regulation ought to be carefully balanced against its possible benefits.

But these principles fall short in two ways. First, they don’t pay enough attention to AI’s economically divisive potential. AI can make people more productive and efficient, but only those with access to these technologies (along with the computational resources and large-scale data that fuel them).

For example, if intelligent tutoring systems are available only in English, non-English speakers will be at a significant disadvantage. AI applications can also be used anticompetitively, for instance to suppress competitors’ marketing campaigns on one’s own platform.

One of the biggest risks of AI technologies is that their profits could end up in the coffers of a small number of companies with access to the right combination of algorithms, computation and data, even though much of that data is generated by their users. Such concentration could widen the gap between the “haves” and the “have-nots” to the point that a peaceful society becomes unsustainable. Regulatory agencies must urgently consider how their actions (or inactions) will affect the long-term distribution of wealth.

Second, the memo includes a brief section on “International Regulatory Cooperation,” but the emphasis is on making sure that “American companies are not disadvantaged by the United States’ regulatory regime.” What’s overlooked is that many AI applications are deployed globally, so inconsistencies among countries’ regulatory requirements can themselves become a barrier to innovation.

Whenever high-level policy objectives are aligned across borders, agencies ought to do everything possible to align the details of those regulations as well. For example, despite the current lack of comparable U.S. regulations, American companies still have to comply with Europe’s General Data Protection Regulation (GDPR) if they want their products to be accessible there. New U.S. regulations ought to be readily compatible with other countries’ policies, at least those of countries with similar ideals.

If we end up with an inconsistent morass of international regulations, complying with them will place a particular burden on small companies, thus stifling innovation. Given the memorandum’s focus on encouraging innovation, this omission is unfortunate.

These two oversights notwithstanding, the memorandum has many redeeming qualities. I am especially encouraged to see an acknowledgment of the potential value of nonregulatory steps, such as sponsoring pilot programs to help policymakers better understand AI, and encouraging and participating in the creation of voluntary consensus standards. Such standards must be monitored for adherence and sufficiency of scope, but they are an important part of the standards ecosystem and ought to be actively coordinated with government actions.

If the government refines these principles to recognize both the potential for AI technologies to dramatically increase economic inequality and the need for international regulatory coordination, the memorandum will be a fantastic step toward providing appropriate guidance to government agencies and codifying a national AI policy in the U.S.

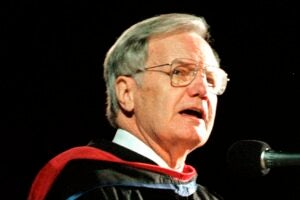

Peter Stone is the David Bruton Jr. Centennial Professor of Computer Science at The University of Texas at Austin, the executive director of Sony AI America, and chair of the Standing Committee of the One Hundred Year Study on AI (AI100).

A version of this op-ed appeared in The Hill.