The odds of getting colorectal cancer in America are 1 in 25 for women and 1 in 23 for men. Polyps, which are precursors to cancer, can be found and removed with colonoscopies — 15 million of which are performed in the U.S. annually. Colonoscopies are also critical to the diagnosis of Crohn’s disease, ulcerative colitis, and other colon and rectal diseases.

About 30% of all polyps are precancerous growths called adenomas, but the detection rate of adenomas varies from 7% to 60%. This difference in adenoma detection may be due to endoscopist technique, suboptimal colon clearing for the procedure, or tricky polyp locations in the colon that some endoscopists may miss. It is also important that the whole adenoma is removed, as any remaining adenoma tissue may grow into a cancerous tumor.

Three professors at UT in different disciplines have come together in hopes of drastically improving the hunt for adenomas and other colorectal disease: one a surgeon at Dell Med, one in mechanical engineering, and one in electrical engineering. And they’re deploying artificial intelligence in the hunt.

Joga Ivatury, associate professor and chief of colorectal surgery at UT’s Dell Medical School, is partnering with Farshid Alambeigi, an assistant professor of mechanical engineering, on one project and Radu Marculescu, a professor of electrical and computer engineering, on another. They hope AI will help doctors detect polyps they can’t find even with the best current technology. “Farshid is the brains behind one operation. Radu is the brains behind the other operation. I’m merely the colorectal surgeon,” Ivatury demurs. Alambeigi came to UT in 2019 from Johns Hopkins and Marculescu in 2020 from Carnegie Mellon. Ivatury trained in San Antonio and came to UT in 2021 after stints at Minnesota and Dartmouth.

Cross-campus collaborations such as these were exactly the hope when UT, a longtime research powerhouse especially strong in both engineering and computer science, founded Dell Medical School a decade ago.

“Some AI for adenoma detection already exists,” Ivatury says, “but it doesn’t appear to have moved the needle in terms of identifying the smaller polyps. It identifies the adenomas that most endoscopists can already see. What we’re trying to do is to get the smaller ones and the ones that are very subtle by looking at each colonoscopy image pixel by pixel. Our AI algorithm is learning exactly where the adenoma is and drawing a very precise line around it. This ensures the adenoma is identified and is completely removed. The pixel-based segmentation that Radu is an expert in really can allow that.” Ph.D. student Mostafijur Rahman assists Marculescu in this image segmentation.
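For readers curious what "pixel by pixel" means in practice, here is a minimal sketch in Python. It is not the researchers' algorithm. It assumes a model has already produced a probability for each pixel (the tiny `prob_map` grid below is made up for illustration), then turns those probabilities into a mask, traces the outline of the suspected adenoma, and scores the mask against a pathologist-style reference using the Dice overlap measure, a common way to grade segmentations.

```python
# Hypothetical illustration only: the probability map, the 0.5 threshold,
# and the reference mask are invented, not taken from the UT system.

def threshold_mask(prob_map, threshold=0.5):
    """Turn per-pixel probabilities into a binary mask (1 = suspected adenoma)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]

def boundary_pixels(mask):
    """Return (row, col) of mask pixels that touch a non-mask pixel or the image edge."""
    rows, cols = len(mask), len(mask[0])
    edge = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or mask[nr][nc] == 0:
                    edge.append((r, c))
                    break
    return edge

def dice_score(predicted, truth):
    """Dice overlap: 1.0 means the two masks match exactly, 0.0 means no overlap."""
    overlap = sum(p & t for pr, tr in zip(predicted, truth) for p, t in zip(pr, tr))
    total = sum(map(sum, predicted)) + sum(map(sum, truth))
    return 2 * overlap / total if total else 1.0

prob_map = [
    [0.1, 0.1, 0.2, 0.1, 0.0],
    [0.1, 0.7, 0.8, 0.6, 0.1],
    [0.2, 0.9, 0.95, 0.8, 0.1],
    [0.1, 0.6, 0.7, 0.4, 0.0],
    [0.0, 0.1, 0.1, 0.0, 0.0],
]
truth_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

mask = threshold_mask(prob_map)
outline = boundary_pixels(mask)
score = dice_score(mask, truth_mask)
print("outline pixels:", outline)
print("Dice overlap with reference:", round(score, 3))
```

In this toy case the mask misses one corner pixel of the reference region, so the overlap score falls just short of a perfect 1.0. That kind of small miss at the margin is exactly what a precise outline is meant to prevent.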

Seeing a polyp with an endoscope is currently the only way of evaluating one, and the endoscopist sees only a limited front view. But what if an endoscopist could also “feel” the colon lining or potential polyp before deciding whether to remove it? Ivatury and mechanical engineer Alambeigi are developing a device that will allow doctors to do just that with an inflatable tactile sensor.

In the near future, once an endoscopist sees a polyp with the camera, they will move a donut-shaped balloon, two inches in diameter, that fits like a collar around the camera, over the suspicious area. Then, with a joystick device, they will inflate the donut, either with air or liquid, and the donut, partially covered with a sensor, will tell the endoscopist whether the area is hard or soft, rough or smooth. (In general, hard or rough is bad.) The tactile sensor acts as a virtual fingertip on any surface. The sensor in turn generates its own image, and so its full name is vision-based tactile sensor.

Ivatury and Alambeigi have received a grant from UT’s IC2 Institute to develop the tactile sensor and hope that within five years it could be in clinical trials. Meanwhile, Ivatury and Marculescu have received a Texas Catalyst Grant from UT and Dell Med to pursue their research on the use of AI in colonoscopy.

In both projects — the visual (Marculescu) and the tactile (Alambeigi) — AI will be doing three things:

First, it is learning. Ivatury thinks of the challenge in terms of yard work. “It’s like this large lawn, and we need to take out only the weeds. What we’re doing is training the system with all the different possible types of weeds, different yards, telling it: ‘This is a weed; this is grass.’” These “pictures” in the analogy are data from two sources: Ivatury’s own colonoscopy images from Dell Med and publicly available health data sets. “What we’re doing now is getting pictures of real yards and real weeds, pulling those weeds and making sure they’re truly weeds based on pathology,” he says.

“We’re going to use that sensor on real pathology from my surgical specimens, my patients.” The diversity of the patients coming through the door at Dell Seton (Dell Med’s teaching hospital) and UT Health Austin (Dell Med’s clinical/outpatient arm) puts the research much further ahead. “Because our patient population at Dell Med is a diverse population, we know not only that the patient has this disease process, we know if they’re male or female, white Caucasian, Hispanic, African American, Asian. We can ensure that our input data set is balanced in terms of race, gender, age, ethnicity, as well as disease process,” says Ivatury.

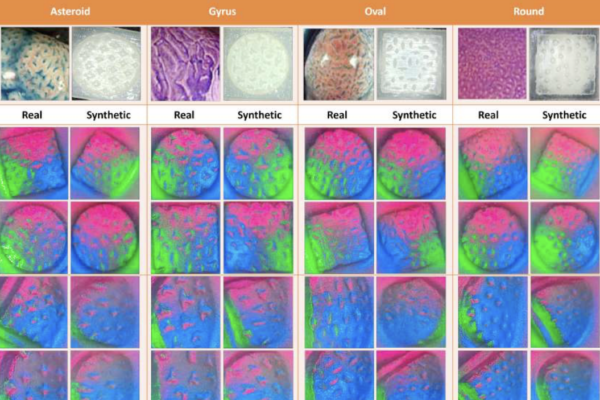

Second, AI will take what it has learned from real samples and extrapolate to create a larger database of AI-generated ones. Think of this as uploading hundreds of pictures of yards with and without weeds, then creating thousands of other pictures that will teach the machine to identify them in any possible scenario with regard to angle, lighting and the surrounding topography of the colon, which is tricky to say the least.

Once you have enough data from real life, either visual data from the camera or tactile data from the sensor, AI can then make up “phantom data.” If you have 50 diverse patients, it can create phantom patients with cancer, Crohn’s disease, etc. In our lawns-and-weeds analogy, Marculescu says synthetic data generation is like creating multiple lawns in different contexts such as different shaded areas or viewing angles. “The idea would be for some of them to be hard to catch,” he adds.

“I don’t need to do this for 10,000 patients to ensure we have all the balance,” says Ivatury. “AI can extrapolate from the data we put in to create a diverse group of ‘phantom’ patients that in turn are used to train the algorithm.” Once he gets enough data, he can use the synthetic data generation to create as many yards as needed with every combination of weeds, and some yards with no weeds, so that by the time it gets to a real patient, the algorithm has seen most if not all combinations. And as it continues to see more patients, it will gather more data and continue to improve.
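To make the idea of "many lawns from one lawn" concrete, here is a minimal sketch in Python. It uses simple data augmentation (flipping, rotating and brightening or dimming an image) as a stand-in. The researchers describe AI-generated synthetic data, which is considerably more sophisticated, so treat this only as an illustration of varying viewing angle and lighting. The 3-by-3 "image," its pixel values and the function names are all hypothetical.

```python
import random

# Hypothetical illustration: simple augmentation, not the generative approach
# described in the article. Images are small grids of 0-255 intensities.

def adjust_brightness(image, factor):
    """Scale pixel intensities to mimic brighter or dimmer lighting."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def flip_horizontal(grid):
    """Mirror the grid left to right."""
    return [list(reversed(row)) for row in grid]

def rotate_90(grid):
    """Rotate the grid clockwise by 90 degrees."""
    return [list(row) for row in zip(*grid[::-1])]

def make_variants(image, mask, count, seed=0):
    """Create `count` altered copies of an image, moving its label mask along with it."""
    rng = random.Random(seed)
    variants = []
    for _variant in range(count):
        img, msk = image, mask
        if rng.random() < 0.5:
            img, msk = flip_horizontal(img), flip_horizontal(msk)
        for _turn in range(rng.randrange(4)):
            img, msk = rotate_90(img), rotate_90(msk)
        # Lighting changes affect the picture only; the label stays the same.
        img = adjust_brightness(img, rng.uniform(0.6, 1.4))
        variants.append((img, msk))
    return variants

# One "yard": background tissue at 100, a single brighter "weed" pixel at 200.
image = [[100, 100, 100], [100, 100, 200], [100, 100, 100]]
mask = [[0, 0, 0], [0, 0, 1], [0, 0, 0]]

variants = make_variants(image, mask, count=5)
for img, msk in variants:
    print(img, msk)
```

The key design point is that every geometric change is applied to the picture and its label together, so each new "yard" still has its weed correctly marked.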

Marculescu says synthetic data generation, used to compensate for a scarcity of data, will be “hugely impactful if done properly.” He likens synthetic data generation to taking practice tests in the hope that when you take the real test, you’ll do better on it. A colonoscopy is a traumatic, complicated procedure, he says, so people are not going to voluntarily line up to have one for the sake of creating a larger AI database. Scarcity of data in the medical domain will always be a problem, he says, because collecting it is usually invasive.

In general, the larger the sample size, the more likely the algorithm is to detect a polyp. But as with most applications of AI, users must be on guard against bias. “Bias is a huge issue in the medical field,” Marculescu says. How do we guarantee that data and machine learning are not biasing one gender over another or one race or ethnicity over another? And bias is not only or even mainly a matter of race or gender: There are many types of polyps, two of which are very common. If we are using a small sample size to train the algorithm, then it will become biased toward the two common types, making it more likely to miss the rarer types.
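The imbalance problem described above can be seen in a few lines of Python. This is a minimal sketch under stated assumptions: the polyp type labels (`common_a`, `common_b`, `rare_c`) and image IDs are placeholders, and the fix shown, repeating rare examples until every type is equally represented, is a basic oversampling technique standing in for the synthetic data generation the researchers describe.

```python
import random
from collections import Counter

# Hypothetical illustration: labels and IDs are placeholders, and plain
# oversampling is a simple stand-in for AI-generated synthetic examples.

def oversample_to_balance(samples, seed=0):
    """Repeat examples of rarer types until every type appears equally often.

    `samples` is a list of (image_id, polyp_type) pairs.
    """
    rng = random.Random(seed)
    by_type = {}
    for sample in samples:
        by_type.setdefault(sample[1], []).append(sample)
    target = max(len(group) for group in by_type.values())
    balanced = []
    for group in by_type.values():
        balanced.extend(group)
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced

samples = (
    [(f"img_a{i}", "common_a") for i in range(6)]
    + [(f"img_b{i}", "common_b") for i in range(5)]
    + [("img_c0", "rare_c")]
)

before = Counter(polyp_type for _, polyp_type in samples)
balanced = oversample_to_balance(samples)
after = Counter(polyp_type for _, polyp_type in balanced)
print("before:", dict(before))
print("after: ", dict(after))
```

Repeating the same rare image adds no new information, which is one reason realistic synthetic images are attractive: they could fill out the rare categories with examples the algorithm has not already seen.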

Extrapolation from a diverse set of real-life cases is a case of using AI to overcome a problem inherent to AI: bias. If they can use AI to create synthetic images that are as realistic as actual images, then those can be used to teach the algorithm more completely, thus mitigating that bias. Alambeigi says, “We are proving that synthetic images are as good as real images.”

Finally, AI will assist in the procedure. AI during a colonoscopy will control for the differences in the often complicated contours of even a healthy colon, for the lighting, and, in instances when the colon is not completely clean, for anything obscuring the view.

When scientists started benchmarking AI’s ability to classify objects, the error rate was astronomical, 30-40%, according to Marculescu. But by 2014, AI had surpassed human vision, with the error rate falling below 3.5%. As remarkable as this is, Marculescu says, “The reality is that even that result should be taken with a grain of salt because dealing with patients is not the same thing as dealing with images of cats and dogs and trucks and cars and images that are well lit.”

The difference between sick and healthy tissue can be extremely subtle. Since the new AI-assisted imaging is designed to work at the pixel level (the tiniest unit of a video display), it should help endoscopists during the procedure. “In real time, it’s going to work in the background while you’re having your colonoscopy because if it doesn’t, then what’s the point of doing it?” asks Ivatury. “We need to be able to say, ‘Hey, look over there, there’s something suspicious. You need to check that out, you should see how it feels and maybe take it out.’”

Part of Alambeigi’s excitement about AI is that it can help reduce the subjective nature of exams so that less experienced endoscopists have the same “decision cover.” Another use of AI is that it can use the data to create more realistic simulators (think flight simulators) for doctor training. Lastly, through AI language models, doctors can have dialogues with the system by prompting it with voice commands to control the camera view such as zooming in and zooming out and conceivably even asking questions about what the system is seeing.

Moreover, the implications of this work in no way stop at the walls of the colon but are transferable to innumerable other areas of medicine where visual information is key. When it comes to surgery, whether dental implants or brain surgery, surgeons nearly always use an aid to help them see more closely. AI, says Marculescu, is simply one more such tool.